As of late, artificial intelligence specialists have been fruitful in a scope of mind boggling game conditions. For instance, AlphaZero beat title holder programs in chess, shogi, and Pursue beginning with knowing something like the essential guidelines of how to play. Through Support Learning (RL), realize this single framework by playing many rounds through an iterative course of experimentation.

Yet, AlphaZero actually prepares independently for each game - basically unfit to gain proficiency with one more game or undertaking without rehashing the RL interaction without any preparation. The equivalent is valid for RL's different hits, like Atari, Catch the Banner, StarCraft II, Dota 2, and Find the stowaway. DeepMind's central goal of addressing insight to propel science and mankind incited us to investigate how we could defeat this restriction to make man-made intelligence specialists with more broad and versatile way of behaving. Rather than learning each game in turn, these clients will actually want to answer totally new circumstances and play an entire universe of games and undertakings, including those they have never seen.

Today, we distributed Open Learning Prompts Commonly Able Specialists, a preprint specifying our initial steps to preparing a specialist fit for playing various games without the requirement for human cooperation information. We established an immense gaming climate that we call XLand, which incorporates numerous multiplayer games inside predictable, human-like 3D universes. This climate empowers the definition of new learning calculations, which progressively control how the specialist is prepared and what games it is prepared on. A specialist's capacities work on much of the time because of difficulties that emerge in preparing, with the growing experience ceaselessly further developing preparation assignments so the specialist learns constantly. The outcome is a specialist with the capacity to prevail in a large number of errands - from straightforward item finding issues to complex games like find the stowaway and catch the-banner, not experienced during preparing. We find that the specialist shows general heuristic ways of behaving, for example, trial and error and ways of behaving that can be extensively applied to many undertakings as opposed to having some expertise in a solitary errand. This new methodology addresses a significant stage towards making more conventional specialists with the adaptability to adjust in always changing conditions rapidly.

A world of training tasks

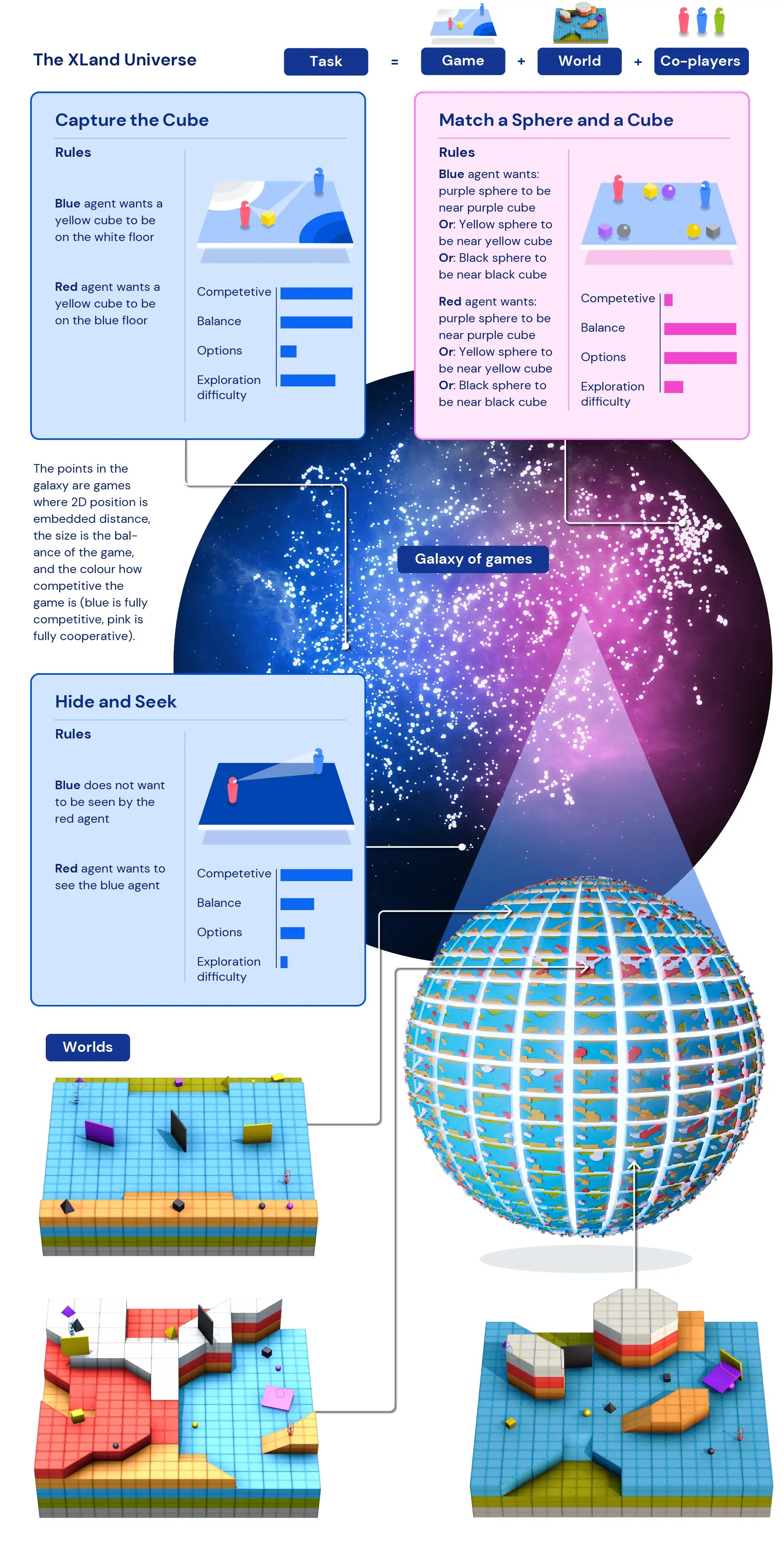

The lack of training data—where the ‘data’ points are different tasks—was a major limiting factor in the behavior of trained RL agents generally enough to be applied across games. Without being able to train the agents on a sufficiently wide range of tasks, the RL-trained agents were unable to adapt their learned behaviors to the new tasks. But by designing a simulation space to allow for procedurally generated tasks, our team created a way to train on and generate experience from programmatically generated tasks. This allows us to include billions of missions in XLand across diverse games, worlds and players.

Our AI agents inhabit 3D first-person avatars in a multiplayer environment intended to simulate the physical world. Players feel their surroundings by observing RGB images and receiving a text description of their target, and practice a range of games. These games are as simple as cooperative games of finding objects and navigating worlds, where the player’s goal can be to “be near the purple cube”. More complex games can be based on choosing from multiple reward options, such as “be near the purple cube or put the yellow ball on the red floor”, and more competitive games involve playing against participating players, such as the matching game of hide and seek where each player has The goal is, “See the opponent and make the opponent not see me.” Each game determines the rewards for the players, and the ultimate goal of each player is to maximize the rewards.

Because XLand can be selected programmatically, the game space allows data to be generated in an automated and algorithmic manner. And since missions in XLand involve multiplayer, the behavior of the players involved greatly affects the challenges the AI agent faces. These complex, nonlinear interactions create an ideal source of data to train with, because sometimes small changes in the components of the environment can lead to big changes in the challenges facing agents.

Training methods

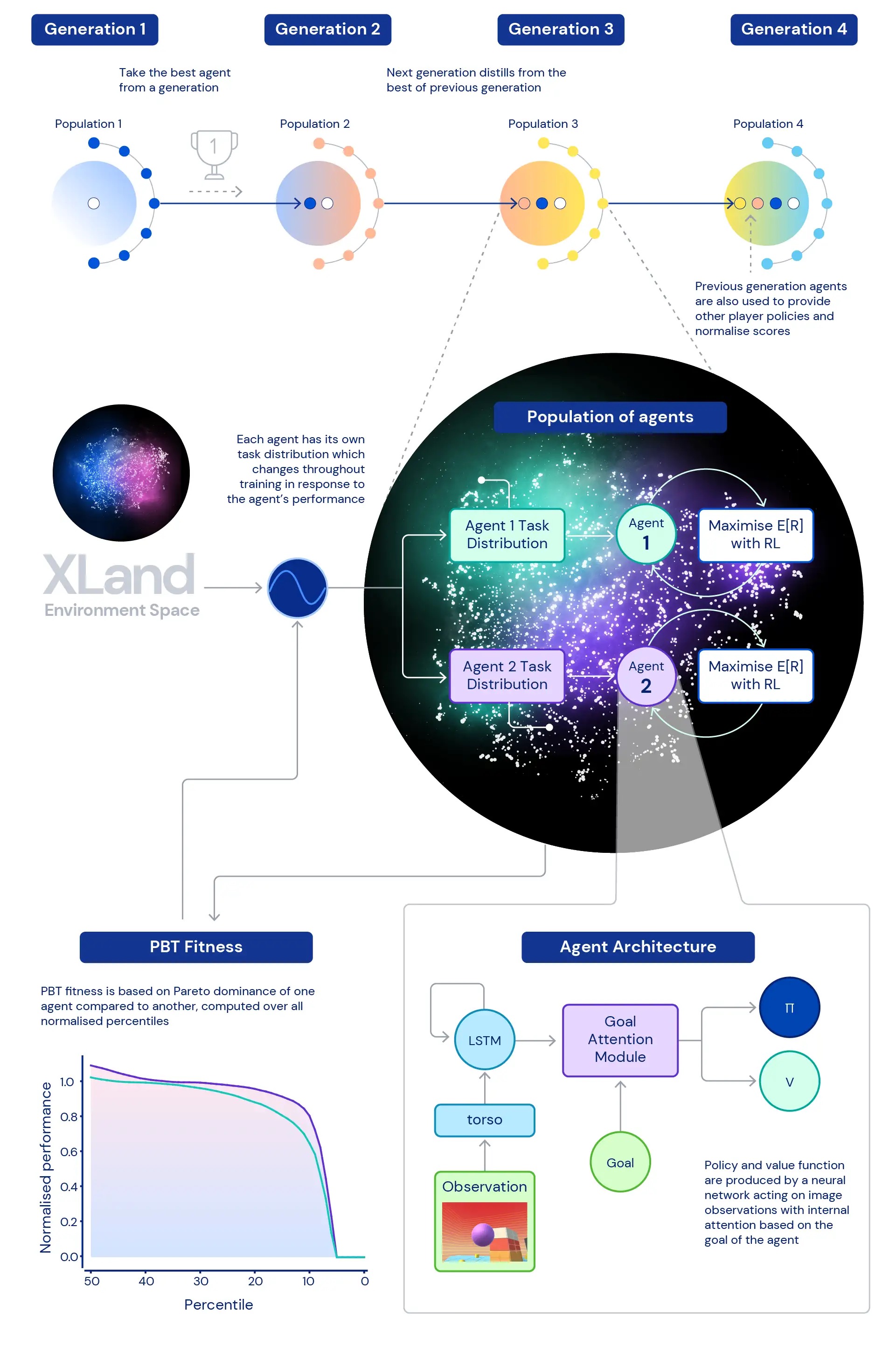

The focus of our research is the role of deep RL in training neural networks for our agents. The neural network architecture we use provides an attention mechanism on the agent’s internal recurring state – helping to orient the agent’s attention with estimates of sub-objectives unique to the game the agent is playing. We found that this GOAT agent learns more capable policies overall.

We also explored the question, what training assignment distribution would produce the best possible agent, especially in such a vast environment? The dynamic task generation we use enables continuous changes to the distribution of agent training tasks: each task is created so that it is neither too difficult nor too easy, but suitable for training. We then use population-based training (PBT) to adjust the criteria for creating dynamic tasks based on fitness aimed at improving the agents’ general ability. Finally, we chain several training courses together so that each generation of agents can boot to the previous generation.

This leads to a final training process with deep RL at the core updating neural networks for agents with each experience step:

- Experience steps come from training tasks that are generated dynamically in response to the agents’ behavior,

- The task-generating functions of agents change in response to the agent’s relative performance and strength,

- In the outer ring, generations of agents booting from each other provide players with a richer co-op environment, redefining the measure of progression itself.

The training process starts from scratch and iteratively builds complexity, constantly changing the learning problem to keep the agent learning. The iterative nature of a blended learning system, which improves not a limited measure of performance, but rather a recursively defined range of general ability, leads to an open-ended learning process for agents, limited only by the expression of the environment space and the agent neural network.

measure progress

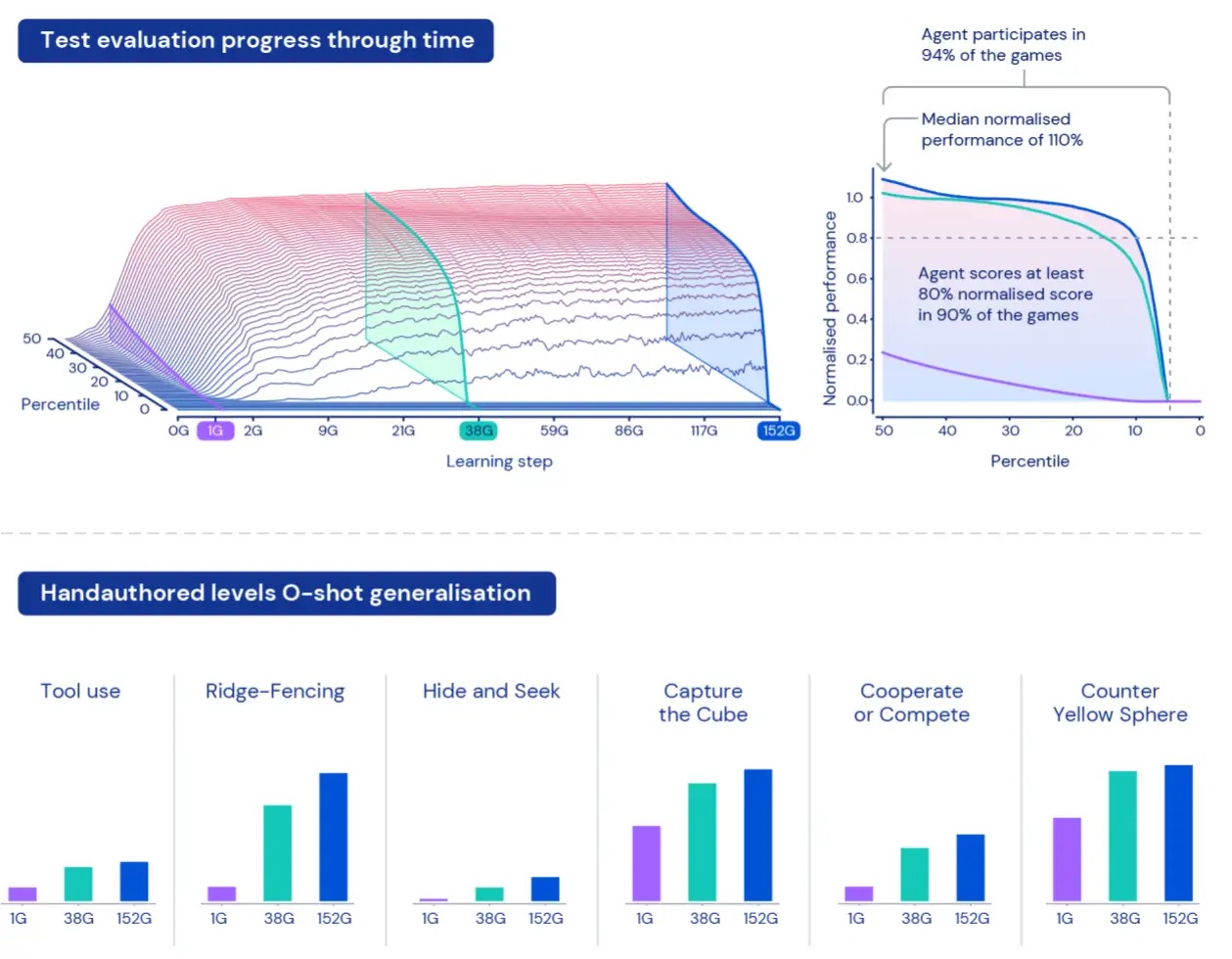

To measure the performance of agents in this vast universe, we create a set of assessment tasks using games and worlds kept separate from the data used for training. These “holding” tasks include specifically human-designed tasks such as hiding, searching, and capturing the flag.

Because of the size of the XLand, understanding and characterizing our dealer’s performance can be a challenge. Each mission includes different levels of complexity, different measures of achievable rewards, and different abilities of the agent, so simply averaging the reward over outstanding tasks would mask the actual differences in complexity and rewards – and would effectively treat all tasks as equally interesting. , which does not necessarily apply to procedurally generated environments.

To get around these limitations, we take a different approach. First, we normalize the scores for each task using a Nash equilibrium value calculated using our current set of trained players. Second, we consider the entire distribution of normative scores—instead of looking at the mean of the normative scores, we look at the different percentages of the normative scores—plus the percentage of tasks in which the agent scores at least one rewarding step: engagement. This means that an agent is not considered better than another agent unless it outperforms on all percentages. This approach to measurement gives us a useful way to assess our agents’ performance and strength.

Agents are generally able

After training our agents for five generations, we’ve seen consistent improvements in learning and performance across our evaluation space. Playing nearly 700,000 unique games in 4,000 unique worlds within XLand, each agent in the latest generation has encountered 200 billion training steps as a result of 3.4 million unique missions. At this time, our agents were able to participate in every procedurally generated assessment task except for a handful that were impossible even for a human. And the results we see clearly show an overall hit-free behavior across the task space – with the limits of normal hit percentages constantly improving.

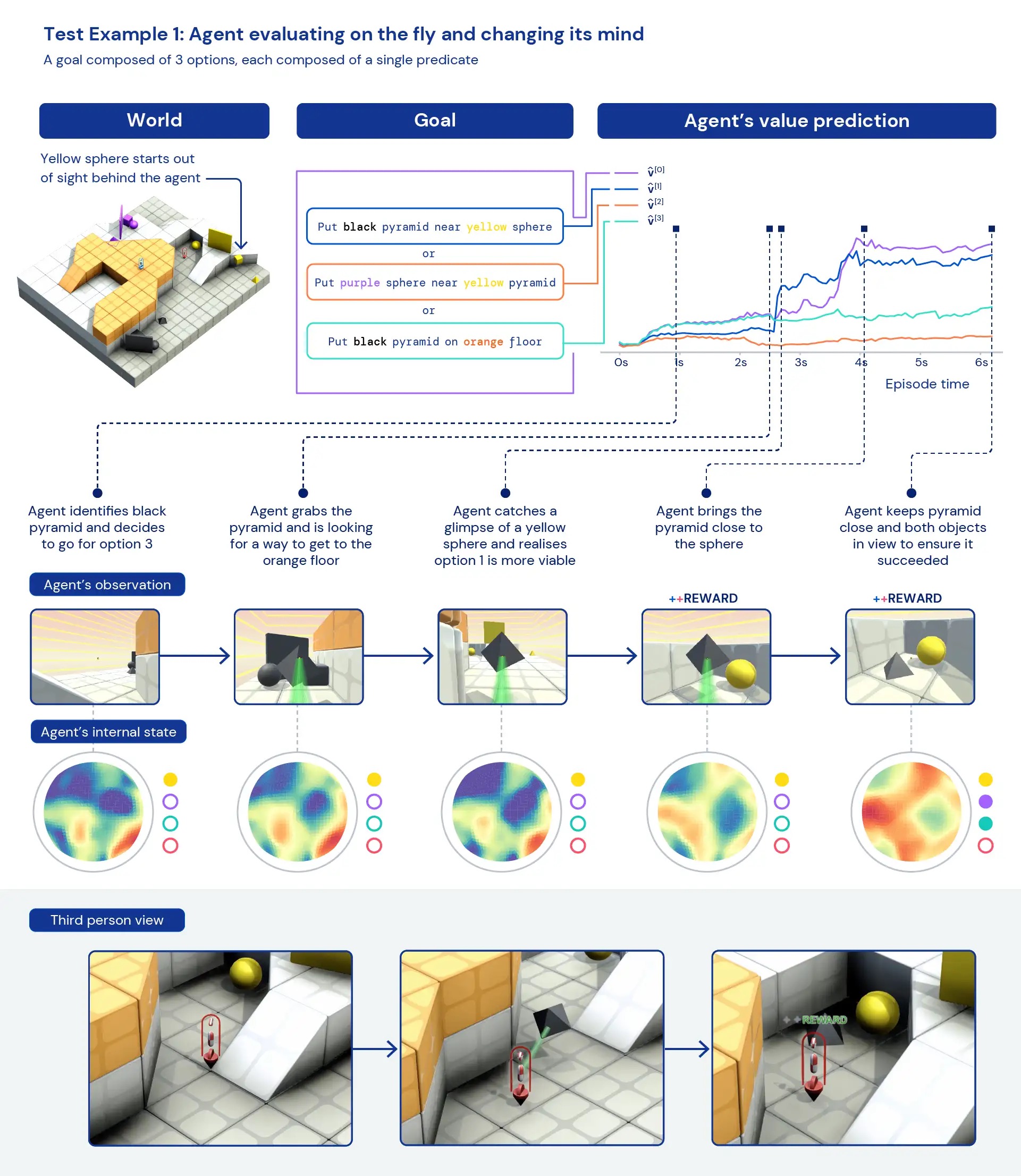

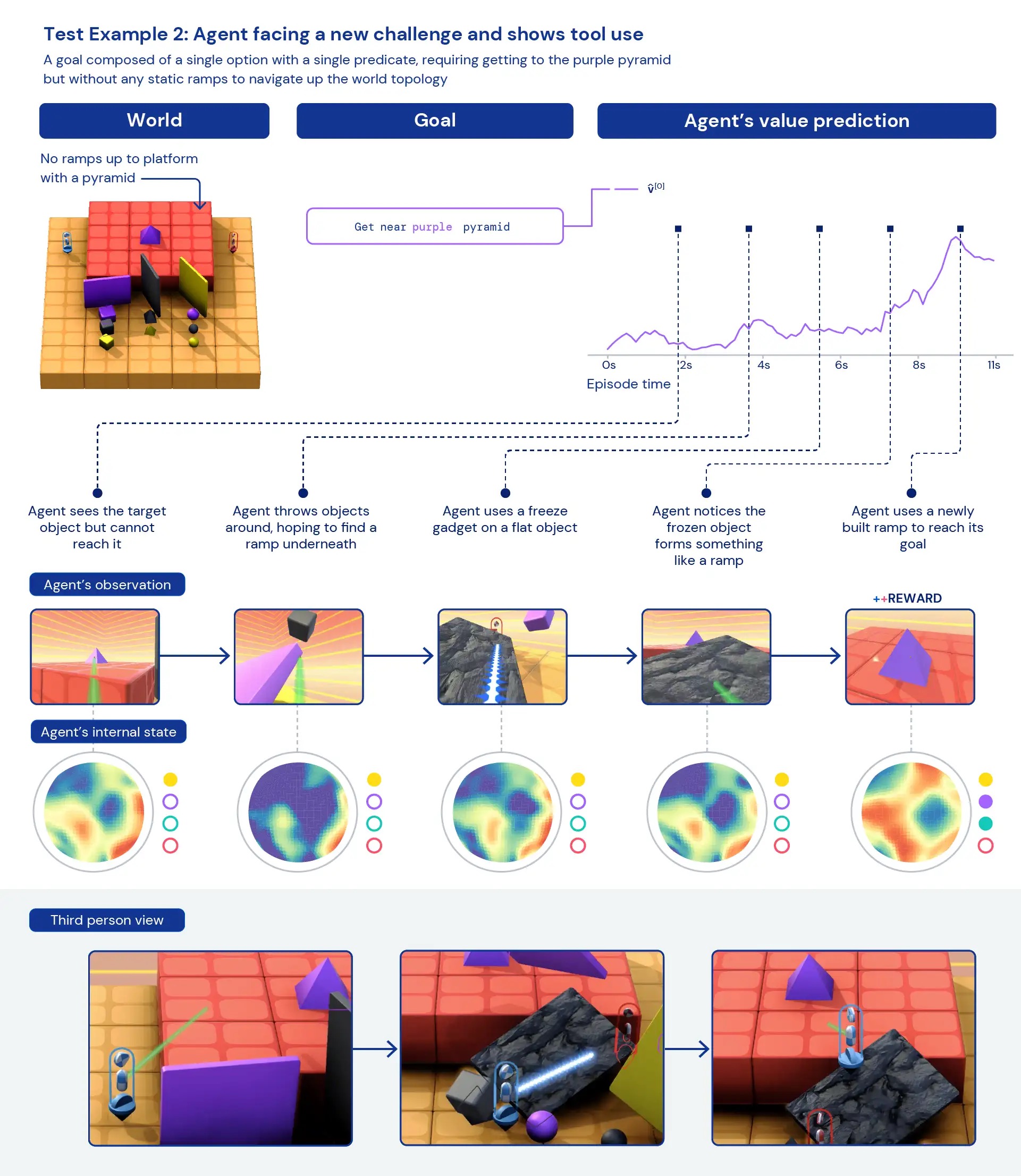

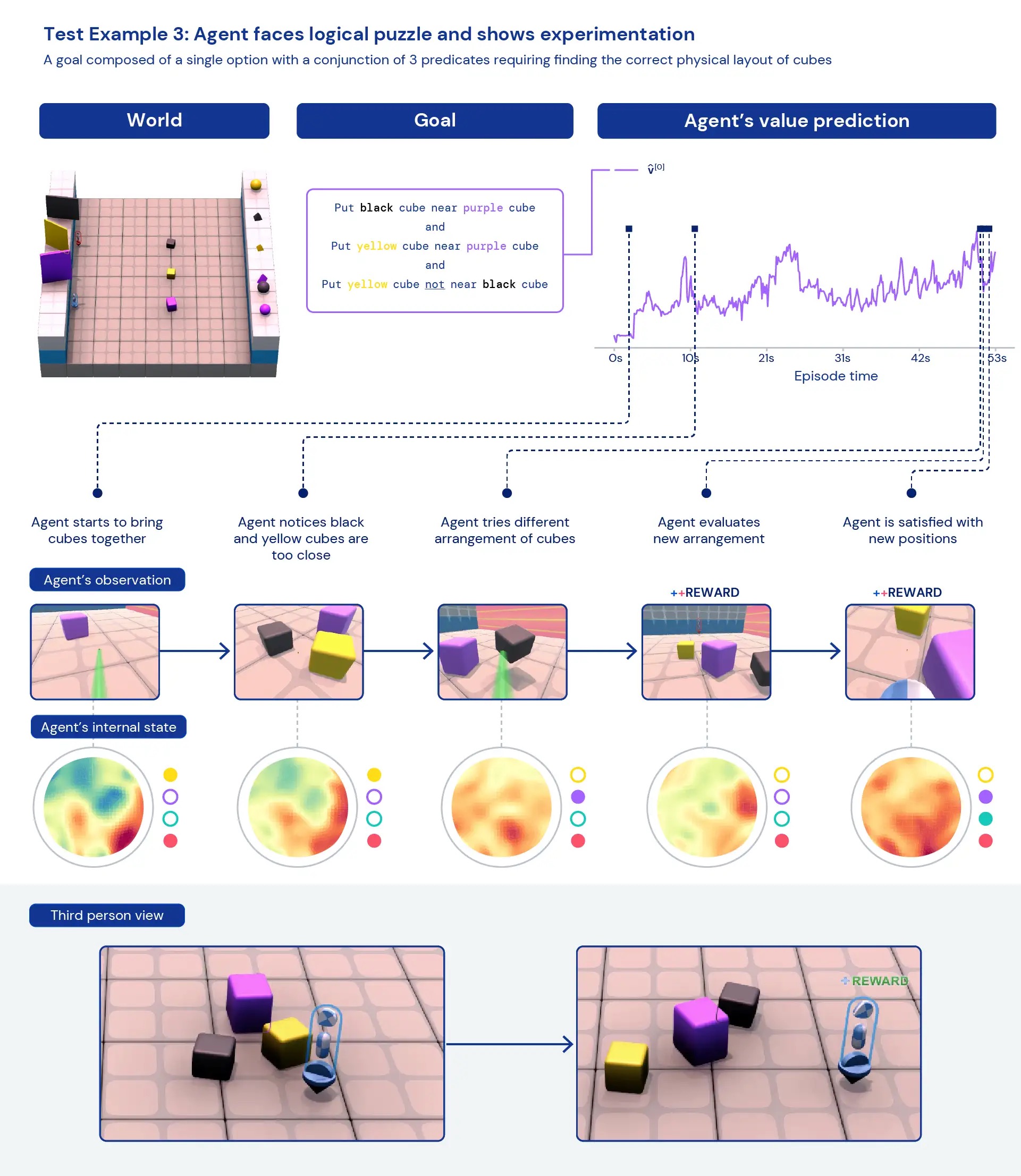

Looking at our agents qualitatively, we often see general prescriptive behaviors emerge—rather than specific, highly optimized behaviors for individual tasks. Instead of agents knowing exactly the “best thing” to do in a new situation, we see evidence of agents experimenting and changing the state of the world until they reach a rewarding state. We also see agents relying on other tools, including objects, to block vision, create ramps, and retrieve other objects. Because the environment is multiplayer, we can examine the evolution of agent behaviors during training for chronic social dilemmas, such as a game of ‘chicken’. As training progresses, our agents seem to exhibit more cooperative behavior when playing with a version of themselves. Because of the nature of the environment, it’s hard to pinpoint intention — the behaviors we see often seem incidental, but we still see them happen constantly.

By analyzing the agent’s internal representations, we can say that by taking this approach to reinforcement learning in a vast task space, our agents are aware of the fundamentals of their bodies and the passage of time and that they understand the higher-level structure of the games they encounter. Perhaps most interestingly, they are clearly aware of reward situations in their environment. This generalization and diversity of behavior in the novel tasks suggests that these agents can be tuned into the final tasks. For example, we show in the technical paper that with only 30 min of focused training on a newly presented complex task, agents can quickly adapt, whereas agents trained on RL from scratch cannot learn these tasks at all.

With the development of an environment like XLand and new training algorithms that support the open generation of complexity, we have seen clear signs of zero-fire generalization from RL agents. While these agents are beginning to be generally capable in this task space, we look forward to continuing our research and development to further improve their performance and create ever more adaptive agents.

For more details, see the preliminary version of our technical paper – and videos of the results we saw. Hopefully, this will help other researchers similarly see a new path toward creating more adaptive and generally capable AI agents. If you are excited about these developments, consider joining our team.